Large Language Models (LLMs) are advanced AI systems trained on vast datasets to understand and generate human-like text. These models can assist with content creation, language translation, and even complex problem-solving. Hugging Face, a leader in open-source AI, provides a powerful platform for accessing LLMs. Its extensive model repository and easy-to-use tools make it a go-to platform for developers.

APIs (Application Programming Interfaces) allow applications to communicate with each other, which lets you access powerful AI models without complex setups. Hugging Face makes it simple to work with LLMs by offering free API access to many models. This lets users build applications, perform NLP tasks, and explore generative AI capabilities directly from Python or other programming environments.

In this tutorial, you’ll learn how to get started with Hugging Face and make API requests to interact with LLMs using Python. We will implement a small example to show how you can elevate your projects with state-of-the-art AI capabilities. Let’s dive in!

What is Hugging Face (🤗)?

Hugging Face is an open-source AI platform whose model repositories are built on git. Anyone can host an open-source pre-trained LLM there, and others can use it directly or fine-tune it for their own requirements and host the updated model. This has transformed generative AI development: we no longer need to train an LLM from scratch, which shortens project timelines considerably.

Hugging Face also has public datasets for training, community forums for collaborating and several open-source tools to enhance deployability and scalability. Their motto is to democratise AI and make it available to all.

How to use Hugging Face

We will broadly divide the process into four steps:

- Create a Hugging Face account

- Create a new Access Token key in Hugging Face

- Choose a foundation model based on the purpose

- Use the model

If you already have a Hugging Face account and Access Token, feel free to skip those steps.

Create a Hugging Face account

Hugging Face is free to use for learning and personal development, but you need an account and a personal access token (to authorise the requests you make) to use it.

Free accounts have certain limitations, such as limits on the number of requests per hour, storage, and compute. For learning purposes these are more than enough, but if you want to deploy in a commercial setting, choose a paid pricing plan. In either case, you need an account.
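Because free accounts are rate-limited, a script that sends many requests may occasionally be turned away. One common way to cope is to retry with exponential backoff. The sketch below is a minimal, library-agnostic version: `call_with_backoff` is a hypothetical helper, and it assumes the server signals a temporary refusal with HTTP 429 (Too Many Requests) or 503 (Service Unavailable), the standard status codes for these situations. Hugging Face's exact limits and responses are not covered here.

```python
import time


def call_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call send() until it returns a non-retryable status or attempts run out.

    send is any zero-argument callable returning (status_code, body).
    429 (Too Many Requests) and 503 (Service Unavailable) are treated as retryable.
    """
    retryable = {429, 503}
    for attempt in range(max_attempts):
        status, body = send()
        if status not in retryable:
            return status, body
        if attempt < max_attempts - 1:
            # Exponential backoff: wait 1 s, 2 s, 4 s, ... between attempts
            sleep(base_delay * 2 ** attempt)
    # All attempts were refused; hand the last response back to the caller
    return status, body
```

With `requests`, `send` could wrap the `requests.post` call from the "Using the Model" section and return `(response.status_code, response.content)`. Passing `sleep` in as a parameter keeps the helper easy to test without actually waiting.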

- Go to the Hugging Face website (https://huggingface.co)

- Click Sign Up in the top-right corner

- Enter your email address and set a password

- Next, complete your profile (only a unique username and your full name are mandatory)

- Select an avatar, or skip this step

- Create your account and confirm your email

Voilà! Your first step towards leveraging the power of AI is done.

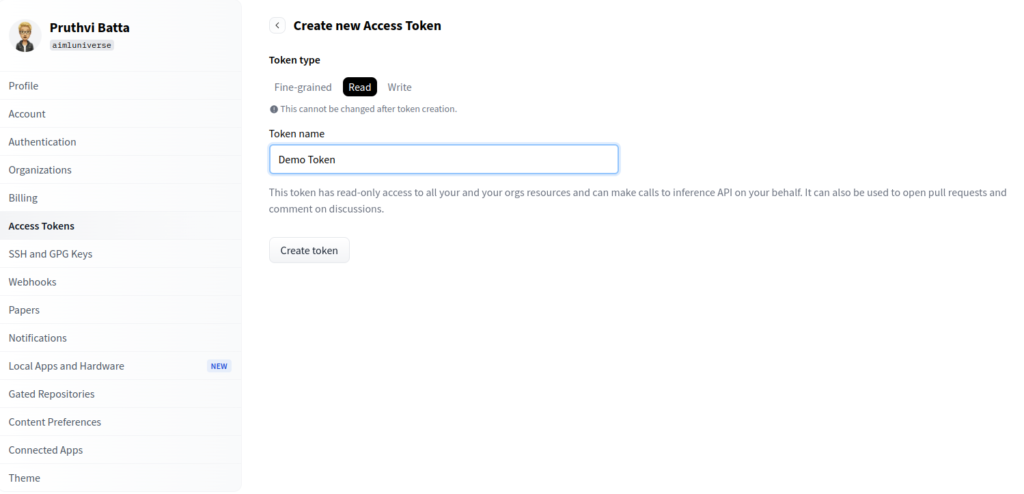

Create a New Access Token in Hugging Face

An access token acts as an identifier and authenticator for your Hugging Face account. As with git hosting services, when multiple people work on a model, tokens with different permission levels control what each of them can do. Tokens also keep other people from accessing or editing your models, which keeps your work safe.

Hugging Face access tokens come in three types:

- Read: Gives read access to all resources you own and lets you make inference calls (API calls to models). Users with this token can also open pull requests on collaborative projects and comment on discussions.

- Write: Grants everything included in Read, plus write access to all your resources.

- Fine-grained: Gives you more control: you grant access only to the services you need and omit the rest. This type is recommended for experienced users.

If you are a beginner looking to use an LLM in your project, choose the Read access type.

- Click your profile picture in the top-right corner

- Go to Settings

- Click Access Tokens in the left-side menu

- Click + Create new token

- Choose your token type (if you choose Fine-grained, select the permissions you need)

- Click on “Create token”

Copy and store your token value now, as it will not be shown again. If you lose it, use the Invalidate and refresh option for that token to get a new value with the same settings, or generate a new token.
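Since the token grants access to your account, it is safer not to paste it directly into your scripts. A minimal sketch, assuming you have exported the token in an environment variable named `HF_TOKEN` (the variable the `huggingface_hub` library also reads): the hypothetical helper `auth_headers` builds the same `Authorization` header used in the API example of this article, and warns if the value does not start with `hf_`, the prefix of the placeholder token shown there.

```python
import os
import warnings


def auth_headers(env_var: str = "HF_TOKEN") -> dict:
    """Return an HTTP Authorization header built from a token stored in an environment variable."""
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your access token")
    # The placeholder token in this article starts with "hf_"; flag values that look different
    if not token.startswith("hf_"):
        warnings.warn(f"{env_var} does not start with 'hf_'; check that it is an access token")
    return {"Authorization": f"Bearer {token}"}
```

On macOS or Linux you might run `export HF_TOKEN=hf_...` in your shell, then pass `headers=auth_headers()` to `requests.post` instead of a hard-coded dictionary.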

Choosing a Foundation model based on the purpose

Foundation models are the core of any generative AI project: whether you use one as-is or fine-tune it, choosing an appropriate base model is key to success. There are thousands of models available on Hugging Face, so we need to filter them based on our requirements, complexity, and relevancy.

- Purpose: First, we need to understand the purpose of our project. Different models are trained for different tasks, on data suited to those tasks. For example, if we need to generate images from a prompt, we should use text-to-image models, since they are trained specifically for that task. Using a different kind of model for this may raise errors or fail to produce suitable results.

- Complexity or Data source: If the information is available (usually on the model card), check the data the model was trained on and how complex it is, to judge whether it is more than you need or not capable enough for your use case.

- Relevancy: Based on the information, you need to validate if the model is suitable for your project. For example, if you are working on generating illustrations of cats, and the model you choose is trained on dog images only, then it is irrelevant to your use case.
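The Purpose criterion is the easiest to apply mechanically, because models on the Hub are labelled with a task (their "pipeline tag"), such as text-to-image or summarization. The sketch below filters a small, hand-written list of candidates by task. The `Candidate` class and `shortlist` helper are hypothetical, and the metadata is typed in for illustration rather than fetched from the Hub (in practice you could read it from each model page or query it with the `huggingface_hub` library). Complexity and relevancy still need a human look at the model card.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    model_id: str
    task: str  # the Hub's "pipeline tag", e.g. "text-to-image"


# Illustrative metadata written by hand for this example, not fetched from the Hub
CANDIDATES = [
    Candidate("stabilityai/stable-diffusion-xl-base-1.0", "text-to-image"),
    Candidate("openai-community/gpt2", "text-generation"),
    Candidate("facebook/bart-large-cnn", "summarization"),
    Candidate("Helsinki-NLP/opus-mt-en-de", "translation"),
]


def shortlist(candidates, task):
    """Keep only the models whose task matches the purpose of the project."""
    return [c.model_id for c in candidates if c.task == task]


print(shortlist(CANDIDATES, "text-to-image"))
# ['stabilityai/stable-diffusion-xl-base-1.0']
```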

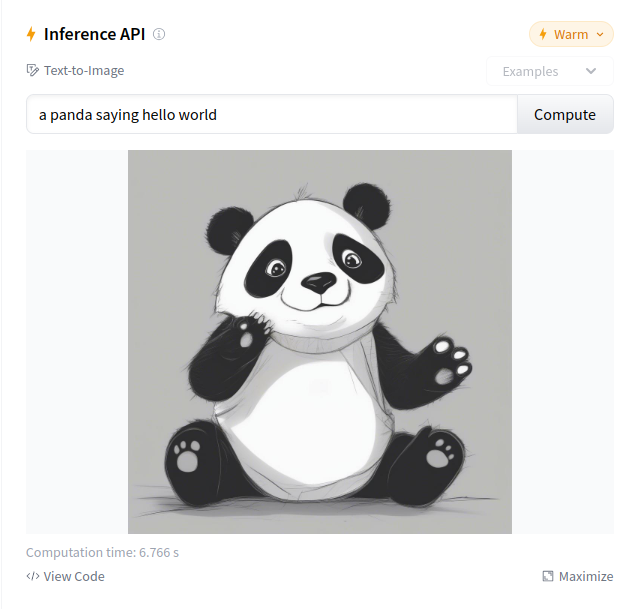

To choose your model, go to the Models tab on Hugging Face and select a model. A quick check you can do on Hugging Face is to run a query through the model’s Inference API and see whether the results are relevant.

As an example, let’s choose a text-to-image model from Hugging Face: stabilityai/stable-diffusion-xl-base-1.0

Validating that the selected model works as expected:

Using the Model

Now that we have chosen the model, let’s quickly see the easiest way to use the model. Open the model page in Hugging Face.

Refer to https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0 for the model used in this article.

- Click Deploy in the top-right corner

- Select Inference API (Serverless)

- You will see a sample code snippet; copy the Python code

```python
import io

import requests
from PIL import Image

API_URL = "https://api-inference.huggingface.co/models/stabilityai/stable-diffusion-xl-base-1.0"
headers = {"Authorization": "Bearer hf_xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"}


def query(payload):
    # Send the prompt to the serverless Inference API and return the raw response body
    response = requests.post(API_URL, headers=headers, json=payload)
    # Stop on HTTP errors (e.g. an invalid token) instead of treating an error message as image data
    response.raise_for_status()
    return response.content


image_bytes = query({
    "inputs": "Astronaut riding a horse",
})

# The response body is the generated image; you can open it with PIL.Image, for example
image = Image.open(io.BytesIO(image_bytes))
```

- Replace the placeholder with your Access Token in the code, so that your request is authorised

- Run the code in a code editor or environment of your choice (it needs the `requests` and `pillow` packages, which you can install with `pip install requests pillow`)

- You can then save the image or use it further in your project
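Because `query` returns the raw response body, an error message (for example, from an invalid token) can end up in `image_bytes` if the HTTP status is not checked, and `Image.open` then fails with a confusing error. A minimal sketch of a guard, assuming the endpoint returns a PNG or JPEG on success: the hypothetical helper `save_if_image` checks the standard file signature before writing to disk, using only the standard library.

```python
from pathlib import Path

# Standard file signatures ("magic numbers") for the two formats we expect
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def save_if_image(image_bytes: bytes, path: str) -> str:
    """Write image_bytes to path if they look like a PNG or JPEG; return the detected format."""
    if image_bytes.startswith(PNG_SIGNATURE):
        fmt = "png"
    elif image_bytes.startswith(JPEG_SIGNATURE):
        fmt = "jpeg"
    else:
        # Not an image: show the start of the body, which is often a readable error message
        preview = image_bytes[:200].decode("utf-8", errors="replace")
        raise ValueError(f"Response is not a PNG/JPEG image: {preview}")
    Path(path).write_bytes(image_bytes)
    return fmt
```

For example, `save_if_image(image_bytes, "astronaut.png")` saves the generated picture; the returned format tells you whether a `.jpg` extension would be more accurate.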

Done! Congratulations, you have successfully called a Hugging Face model API using Python.

Conclusion

You’ve now taken your first steps into the world of Hugging Face and large language models. With just a few lines of code, you can use capabilities that were once accessible only to advanced research teams. This tutorial covers just the basics, but Hugging Face’s platform is rich with possibilities, from fine-tuning models to deploying them in custom applications and building advanced NLP pipelines.

In future posts, we’ll explore how to unlock even more of Hugging Face’s potential, diving into topics like customizing models, optimizing performance, and integrating generative AI into larger projects. Stay tuned for more insights as we continue to explore how Hugging Face can elevate your AI-driven projects to the next level.