Have you ever wondered how machines can process and understand human language?

Machines can process numbers (Ultimately convertible to 1/0), and the process of encoding text as numbers, which can be processed by LLMs is called Vectorization.

Words that make perfect sense to us are nothing more than junk to machines until they’re transformed into something machines can work with (numbers). This transformation process is called vectorization, and it is the backbone of modern natural language processing (NLP) and machine learning, especially in training large language models (LLMs).

In this article, I’ll walk you through what vectorization is, why machines must understand language, and the evolution of vectorization techniques from simple to advanced. By the end, you’ll have a solid understanding of how these techniques work and how they power LLMs like Chat-GPT.

What is Vectorization?

At its core, vectorization is the process of converting text into numbers. Why numbers? Because machines understand numbers, not words. Consider the word “hello.” In a vectorized format, it could look something like this: [0.2, 0.5, 0.3]. These numbers represent specific features of the word, such as its position, usage, or context in a given text.

Why is Vectorization Important?

Without vectorization, machines cannot process language. Words, as we know them, are too abstract for machines to handle directly. Vectorization enables machines to:

- Recognize patterns: Machines can identify similar meanings between words or phrases (Words with similar meanings, can be assigned vectors closer to each other).

- Perform computations: Numbers can be fed into algorithms for training and predictions.

- Preserve relationships: Some methods can capture semantic and syntactic relationships between words.

This numeric representation makes it possible for a machine to process the sentence in ways that mimic human understanding.

Let’s start with some of the simpler techniques for vectorizing text data.

Vectorization Techniques

Bag of Words (BoW)

The Bag of Words model is as simple as it sounds. It represents a text by counting how often each word appears in a document, without paying attention to word order or context.

Example:

Text: I love AI, AI loves me

BoW: {“I”: 2, “love”: 1, “AI”: 2, “loves”: 1, “me”: 1}

BOW Representation:

Let’s consider 3 documents, and see how we can convert them to vectors with a bag of words representation.

- The students helped the teacher learn.

- The teacher helped the students learn

- Vectorization is helpful

Corpus(Unique set of all words): {“The”, “Teacher”, “Helped”, “Students”, “Learn”, “Vectorization”, “Is”, “Helpful”}

| Documents | The | Teacher | Helped | Students | Learn | Vectorization | Is | Helpful |

| The students helped the teacher learn | 2 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |

| The teacher helped the students learn | 2 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |

| Vectorization is helpful | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |

Drawbacks:

- BoW completely ignores the meaning or the order of words.

For example, we treat “The teacher helped the student learn” and “The student helped the teacher learn” as the same, even though their meanings differ. - If more documents are there, the vocabulary size will be very high and the BoW dimensionality will increase, even though the data in a sentence is the same.

In the above example for representing a 3-word sentence, we are using a vector of size 7, and it will increase when additional words are introduced. - We cannot capture Semantic/Grammar information.

Other variants of BoW: N-gram BoW, which has similar drawbacks to BoW, but captures very little context by capturing n consecutive words instead of a single word.

TF-IDF (Term Frequency-Inverse Document Frequency)

TF-IDF builds on BoW by considering how important a word is in the context of an entire document collection. Words that appear frequently in all documents, like “the” or “and,” are down-weighted, while rare but significant words are given more importance.

In a set of documents, the word “data” might appear frequently in one but rarely in others. TF-IDF will assign high weight(Term frequency) in the first document and low weight elsewhere. The words like “the” which appear in every document will be given less weight (Inverse Document Frequency) as they don’t hold any context.

TF-IDF Formula

- Term Frequency (TF):

- Inverse Document Frequency (IDF):

- TF-IDF:

Drawback:

Like BoW, TF-IDF doesn’t account for the order of words or their semantic relationships. But It can assign higher weights to words specific to a document and it can help in clustering the documents that are based on similar topics. However, it can be computationally extensive as vocabulary size will be large.

However these naive methods were not useful as NLP advanced, it became clear that capturing word meaning and context was essential. This led to more advanced techniques like word embeddings and contextualized embeddings.

Word Embeddings

Word2Vec

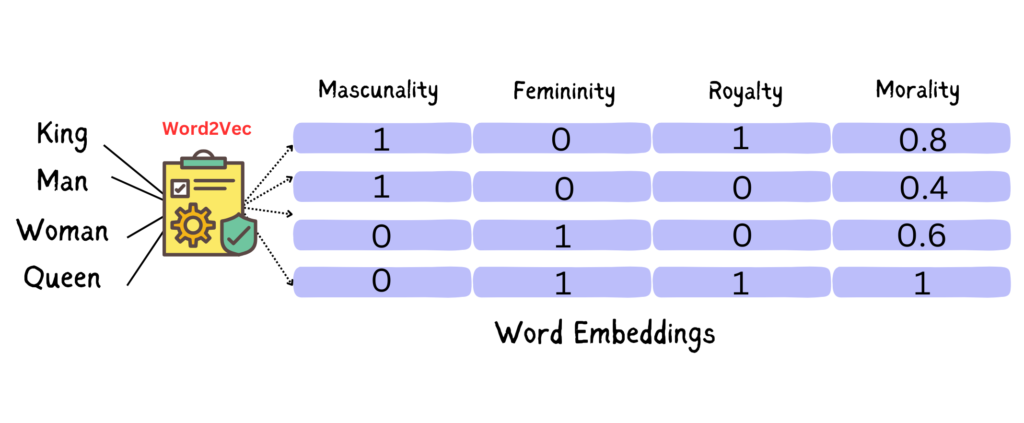

Introduced by Google in 2013, Word2Vec represents words in a continuous vector space(with a pre defined vector size), capturing semantic relationships.

Example: The famous equation, to understand word embeddings: king – man + woman = queen.

Now, even more words can be embedded in to the same vector space, without increasing size, thus overocoming the drawback of Bag of Words approach. Also, Here we are preserving the meaning, context of the words and their relationships.

For example: I will encode as [1, 0, 0.8, 0.8]

However, It doesn’t understand word order or sentence context. We cannot still predict what next word will be in a sentence, as we are looking at word level embeddings.

GloVe (Global Vectors)

To overcome the drawback of Word2Vec, GloVe uses word co-occurrence statistics to create embeddings. Unlike Word2Vec, it balances local (within a sentence) and global (across the corpus) relationships. Hence, it helps us in capturing global relationships compared to Word2Vec. But it has limited contextual understanding (Words will not be differentiated based on their usage/meaning).

In a nut-shell, GloVe will keep words that are similar in meaning together, and words that co-occur in sentences will be kep close. Words that don’t have any relationships will have more distance between them.

Contextualized Embeddings

ELMo (Embeddings from Language Models)

ELMo creates embeddings based on a word’s context within a sentence. For example, the word “bank” in “river bank” vs. “money bank” would have different embeddings. Thus, it understands the context. However, It’s computationally expensive.

Reher here for more on ELMo

Transformers and LLMs (e.g., BERT, GPT)

Transformers revolutionized NLP by introducing attention mechanisms that model relationships between all words in a sentence.

In the sentence “The cat sat on the mat” transformers assign attention weights to each word, allowing the model to understand that “sat” relates more closely to “cat.”

Provides fully contextualized representations, making it ideal for complex tasks like question answering or summarization. However, training transformers requires massive datasets and high computational resources. Refer here for more on Transformers: BERT

Comparison of Vectorization Techniques

| Technique | Strengths | Drawbacks | Use Cases |

| Bag of Words | Simple, interpretable | Ignores context | Text classification, keyword extraction |

| TF-IDF | Balances word importance | Ignores context | Document retrieval, clustering |

| Word2Vec/GloVe | Captures word relationships | No contextual understanding | Sentiment analysis, semantic tasks |

| ELMo/BERT | Fully contextualized understanding | Computationally expensive | Chatbots, translation, LLM training |

Quiz Time

Conclusion

Vectorization bridges the gap between human language and machine understanding. From simple methods like Bag of Words to the advanced contextual embeddings used in LLMs, this evolution has made it possible for machines to achieve near-human performance in language tasks.

Now it’s your turn! Experiment with these techniques in your projects and see how they can transform your NLP workflows.

Responses

[…] For more on feature extraction refer our blog on vectorisation. […]

[…] convert text into meaningful numerical representations, check out this beginner-friendly guide on vectorization in LLMs. This foundational knowledge will help you better understand how RAG systems process and retrieve […]

[…] To learn more on vectorisation refer our guide on Vectorization Simplified for Training LLMs […]

[…] To learn more about how words are transformed into numbers to train models read our blog on Text Vectorisation […]