Introduction

Ever wondered how Netflix can recommend movies you are likely to enjoy, even though you have never watched them, or how ChatGPT can interpret your question and reply?

That is the power of machine learning. By analyzing vast amounts of raw data and turning it into useful, actionable insights, these mathematical algorithms can work out what you are most likely to enjoy!

Machine Learning (ML) is a subset of artificial intelligence (AI) that allows computers to learn patterns from data and make predictions on unseen cases without being explicitly programmed for each specific task. Instead of following hand-written rules, machine learning models improve their performance by identifying trends in the data using mathematical techniques.

In essence, machine learning models learn from historical data to predict outcomes, classify or group objects, detect anomalies, or generate insights. In general, the more relevant, good-quality data you provide to these models, the better they become at making accurate predictions.

How Does Machine Learning Work?

At its core, machine learning involves:

- Data: Historical data, either labelled or unlabelled, used to train the model.

- Algorithm: A set of mathematical instructions for learning patterns from data.

- Model: The output of training, a set of learned mathematical parameters used to make predictions or decisions.

- Evaluation: Assessing how well the model performs using metrics like accuracy, precision, or recall.
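To make the Evaluation step concrete, here is a minimal sketch in plain Python, with no ML library assumed, of how accuracy, precision and recall are computed for a binary classifier. The function name `evaluate` and the sample labels are hypothetical and chosen only for illustration.

```python
def evaluate(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for binary labels, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    return accuracy, precision, recall

# Hypothetical true labels and model predictions (1 = positive, 0 = negative)
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(evaluate(y_true, y_pred))  # → (0.625, 0.6, 0.75)
```

In this example, 3 of the 5 positive predictions are correct (precision 0.6) and 3 of the 4 actual positives are found (recall 0.75). This is why a single accuracy figure (0.625) rarely tells the whole story.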

Types of Machine Learning Algorithms

Machine learning algorithms are broadly categorized into three main types:

1. Supervised Learning

In supervised learning, we train the algorithm on a labelled dataset, meaning each training example is paired with its correct output. The model learns to map inputs to outputs and can then predict outcomes for new, unseen data. We call it a regression problem if the label is continuous (such as a price), and a classification problem if the label is categorical (such as “spam” or “not spam”).
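As a sketch of a regression problem, the snippet below fits a straight line to a handful of made-up examples with continuous labels. It uses ordinary least squares with a single input feature. The house-size data and the `fit_line` helper are hypothetical; real projects would typically rely on a library such as scikit-learn instead.

```python
def fit_line(xs, ys):
    """Fit y = w * x + b to one input feature by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x  # the least-squares line passes through the point of means
    return w, b

# Hypothetical data: house size (in 100 m^2) -> price (in $100k), a continuous label
sizes = [1.0, 1.5, 2.0, 2.5, 3.0]
prices = [2.1, 2.9, 4.1, 4.9, 6.0]

w, b = fit_line(sizes, prices)
print(f"slope={w:.2f}, intercept={b:.2f}")
print(f"predicted price for size 2.2: {w * 2.2 + b:.2f}")
```

On this toy data, the fitted slope is about 1.96 and the intercept about 0.08. The model therefore predicts roughly 4.39 for an unseen size of 2.2.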

- Examples of Supervised Learning Algorithms:

- Linear Regression: Predicts a continuous output based on input features.

- Logistic Regression: Estimates the probability that an input belongs to a class; commonly used for binary classification tasks.

- Support Vector Machines (SVM): Classifies data by finding the decision boundary that separates the classes with the widest possible margin; kernel functions allow this boundary to be non-linear.

- Decision Trees and Random Forests: Tree-based models used for classification and regression.

- K-Nearest Neighbors (KNN): Classifies a data point by a majority vote among the k closest data points in the training set.
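To illustrate classification with a categorical label, here is a minimal K-Nearest Neighbors sketch in plain Python. It assumes numeric 2-D features, Euclidean distance and a simple majority vote. The points, the "red"/"blue" labels and the `knn_predict` name are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label, nearest first
    by_distance = sorted(
        (math.dist(point, query), label) for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    # Majority vote; on a tie, most_common keeps the label encountered first (the nearer one)
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical labelled training data: 2-D feature vectors with categorical labels
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_labels = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_predict(train_points, train_labels, (2.0, 2.0)))  # → red
print(knn_predict(train_points, train_labels, (6.0, 7.0)))  # → blue
```

With two classes, choosing an odd k avoids voting ties. In practice, k is usually tuned on held-out data.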

2. Unsupervised Learning

In unsupervised learning, we give the algorithm a dataset without labelled outcomes. The goal is to find hidden patterns or structures within the data. We often use it for clustering, association problems, and identifying anomalies.

- Examples of Unsupervised Learning Algorithms:

- K-Means Clustering: Groups similar data points together.

- Hierarchical Clustering: Builds a hierarchy of clusters.

- Principal Component Analysis (PCA): Maps high-dimensional data into fewer dimensions while preserving as much of the data’s variance as possible.

- Anomaly Detection: Identifies unusual data points that don’t conform to expected patterns.
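The K-Means idea of grouping similar points can be sketched in a few lines. This is a basic version of Lloyd's algorithm on 2-D points. It alternates between two steps: assigning each point to its nearest centroid, then moving each centroid to the mean of its assigned points. The random initialisation, the iteration cap and the sample points are illustrative assumptions. Note that the algorithm is never given any labels.

```python
import math
import random

def nearest_centroid(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))

def k_means(points, k, max_iters=100, seed=0):
    """Basic k-means (Lloyd's algorithm) on 2-D points; returns centroids and a cluster index per point."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(max_iters):
        # Assignment step: group points by their nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest_centroid(p, centroids)].append(p)
        # Update step: move each centroid to the mean of its points (keep it if the cluster is empty)
        new_centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    labels = [nearest_centroid(p, centroids) for p in points]
    return centroids, labels

# Hypothetical unlabelled data: two visually separate groups of points
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, labels = k_means(points, k=2)
print(centroids)
print(labels)
```

Which group receives cluster index 0 or 1 depends on the random start, so only the grouping itself is meaningful.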

3. Reinforcement Learning

In reinforcement learning, an agent learns through trial and error, receiving continuous feedback from its environment that rewards or penalizes each action according to the objective. The goal is to maximize the total reward accumulated over the actions taken. We commonly use this approach in robotics, gaming, and autonomous systems to choose the best course of action.
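Full reinforcement learning involves an agent moving through many states. The core trial-and-error loop, however, can be sketched with the simpler multi-armed bandit problem, a single-state special case. The sketch below uses an epsilon-greedy strategy. Most of the time the agent exploits the action with the best estimated reward; with probability `epsilon` it explores a random action instead. The win rates, step count and `epsilon` value are hypothetical.

```python
import random

def epsilon_greedy_bandit(win_rates, steps=5000, epsilon=0.1, seed=42):
    """Learn by trial and error which action earns the most reward (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    n_actions = len(win_rates)
    counts = [0] * n_actions      # how many times each action has been tried
    values = [0.0] * n_actions    # running estimate of each action's average reward
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit current best guess
        # Simulated environment feedback: reward 1 with the action's hidden win rate, else 0
        reward = 1 if rng.random() < win_rates[action] else 0
        counts[action] += 1
        # Incremental average: move the estimate towards the observed reward
        values[action] += (reward - values[action]) / counts[action]
        total_reward += reward
    return values, counts, total_reward

# Hypothetical environment with three actions whose win rates the agent does not know
values, counts, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts)  # the 0.8 action is expected to be chosen far more often than the others
```

The balance between exploring and exploiting is controlled by `epsilon`. If it is too low, the agent may settle on a poor action. If it is too high, the agent wastes steps on actions it already knows are worse.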

- Examples of Reinforcement Learning Applications:

- Autonomous cars: Reinforcement learning can improve decision-making in self-driving cars, such as when to change lanes or how to merge, by rewarding safe and efficient driving behaviour.

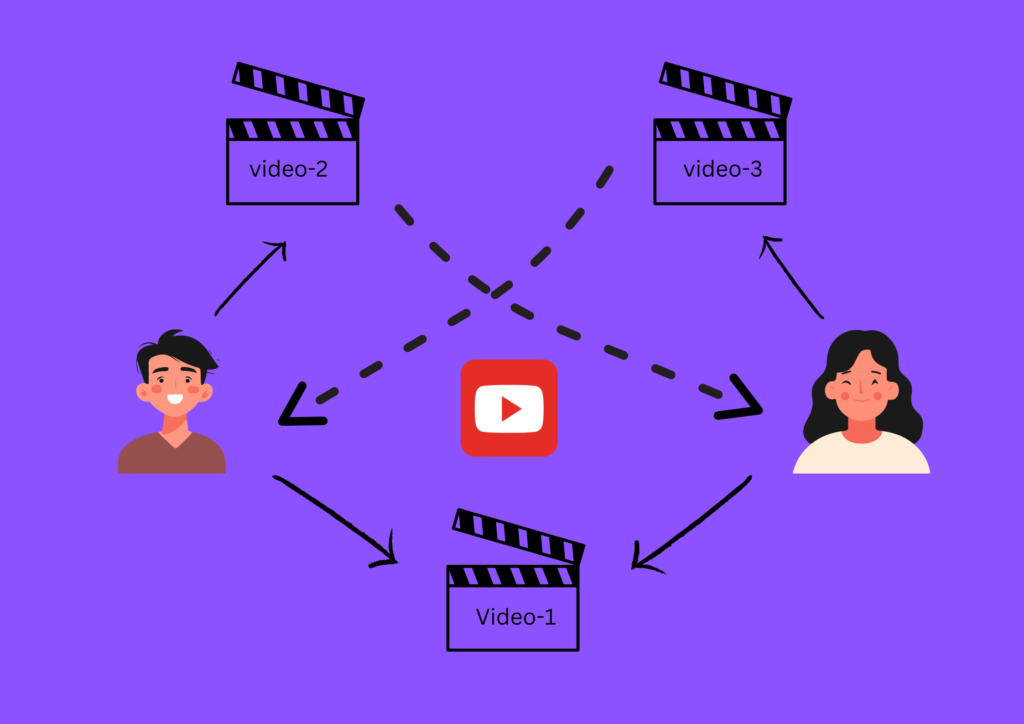

- Recommendation systems: Recommender systems can treat user feedback, such as clicks or watch time, as a reward and continuously refine their suggestions.

Fun Facts for Beginners Entering the Field

Data is the New Oil: Just as oil fuelled twentieth-century industry, data fuels the machine learning revolution.

A Brief History of Machine Learning

1950s – The Birth of Machine Learning

The foundations of machine learning trace back to the 1950s:

- Alan Turing, the British mathematician and pioneer of AI, proposed the “Turing Test” in 1950 as a measure of a machine’s ability to exhibit intelligent behaviour indistinguishable from a human.

- In 1952, Arthur Samuel developed one of the first machine learning programs, a checkers-playing algorithm that improved its strategy by learning from experience.

1980s – The Rise of Neural Networks

Neural networks, whose roots go back to the 1940s and 1950s, gained renewed traction during this time. These algorithms were inspired by the human brain and used layers of “neurons” to process data.

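- Backpropagation, a technique for training neural networks, was popularized in 1986 by Rumelhart, Hinton, and Williams. By propagating the prediction error backwards through the network, it lets models adjust the weights in every layer and achieve better performance.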

1990s – Machine Learning Meets Data

With the rise of the internet, vast amounts of data became available, allowing machine learning algorithms to improve significantly.

- Support Vector Machines (SVM) and ensemble methods such as bagging and boosting gained popularity due to their high accuracy and ability to handle complex data. Random Forests, which extend bagging with decision trees, followed in 2001.

2000s to Present – The Deep Learning Revolution

The late 2000s and the 2010s saw a breakthrough in Deep Learning, a subset of machine learning that uses deep neural networks with many layers of neurons.

- In 2012, AlexNet, a deep convolutional neural network, won the ImageNet competition, a milestone in computer vision, sparking immense interest in deep learning.

- Modern deep-learning techniques have powered innovations like speech recognition, image processing, and autonomous vehicles.

Recent Developments in Machine Learning

Machine learning has made tremendous strides in the past decade. Here are some of the latest advancements:

1. Generative AI

Generative models, like GPT-4 and DALL-E, use deep learning to create new content, such as text, images, or even music. These models have revolutionized fields like content creation, entertainment, and design.

Have you tried the latest ChatGPT model yet? It can give results from live web search.

2. Natural Language Processing (NLP)

NLP has seen significant improvements, enabling machines to understand and generate human language more effectively. Chatbots, virtual assistants (like Siri and Google Assistant), and translation services are powered by advanced NLP models, including Transformer-based models such as BERT.

3. Explainable AI (XAI)

As machine learning models become more complex, there is a growing need for transparency. Explainable AI aims to make black-box models more interpretable, allowing users to understand the rationale behind a model’s predictions.

4. Reinforcement Learning in Gaming

Reinforcement learning has been successfully applied to gaming. Notable achievements include AlphaGo by DeepMind, which beat world champion Go players, and OpenAI’s Dota 2 bot, which defeated professional gamers.

5. AutoML

Automated Machine Learning (AutoML) tools simplify the machine learning workflow by automating tasks like feature engineering, model selection, and hyperparameter tuning. This allows non-experts to build effective models.

Let’s dive into the world of Machine Learning and unlock the future of AI.
