Loss functions tell us how well a model’s predictions match the actual outcomes. Choosing an appropriate loss function can noticeably improve your model’s performance.

In this guide, we’ll explore the various types of loss functions, how they are utilized in machine learning, and their importance in optimization. Additionally, we’ll share real-world examples, research, and practical tips to help you see how loss functions impact model performance.

Table of Contents

- What Are Loss Functions?

- Types of Loss Functions in Machine Learning

- Real-World Examples of Loss Functions

- How to Create a Custom Loss Function

- Why Loss Functions Matter in Model Optimization

- Practical Takeaways

- FAQs About Loss Functions

- Conclusion

What Are Loss Functions?

In machine learning, a loss function measures how well a model’s predictions match the actual outcomes. In other words, it’s like a scorecard that tells us how far off our model is. Training aims to make this score as low as possible by adjusting the model’s parameters.

For example, in supervised learning, the loss function compares predicted values with the actual values, which lets us track the model’s performance. A small loss means the predictions are close to the targets on the data being evaluated, while a high loss means there is room for improvement.

As we dive deeper into how loss functions guide optimization, one key challenge that often arises is when the model becomes too complex or too simple. This, in turn, can lead to issues like overfitting, where the model fits the training data too well, or underfitting, where the model fails to capture important patterns. Understanding how loss functions work can help you fine-tune your model and avoid these pitfalls.

Want to dive deeper into this topic? Check out our post on Overfitting vs. Underfitting: How to Optimize your Machine Learning Model to get insights on balancing model complexity for optimal performance.

Types of Loss Functions in Machine Learning

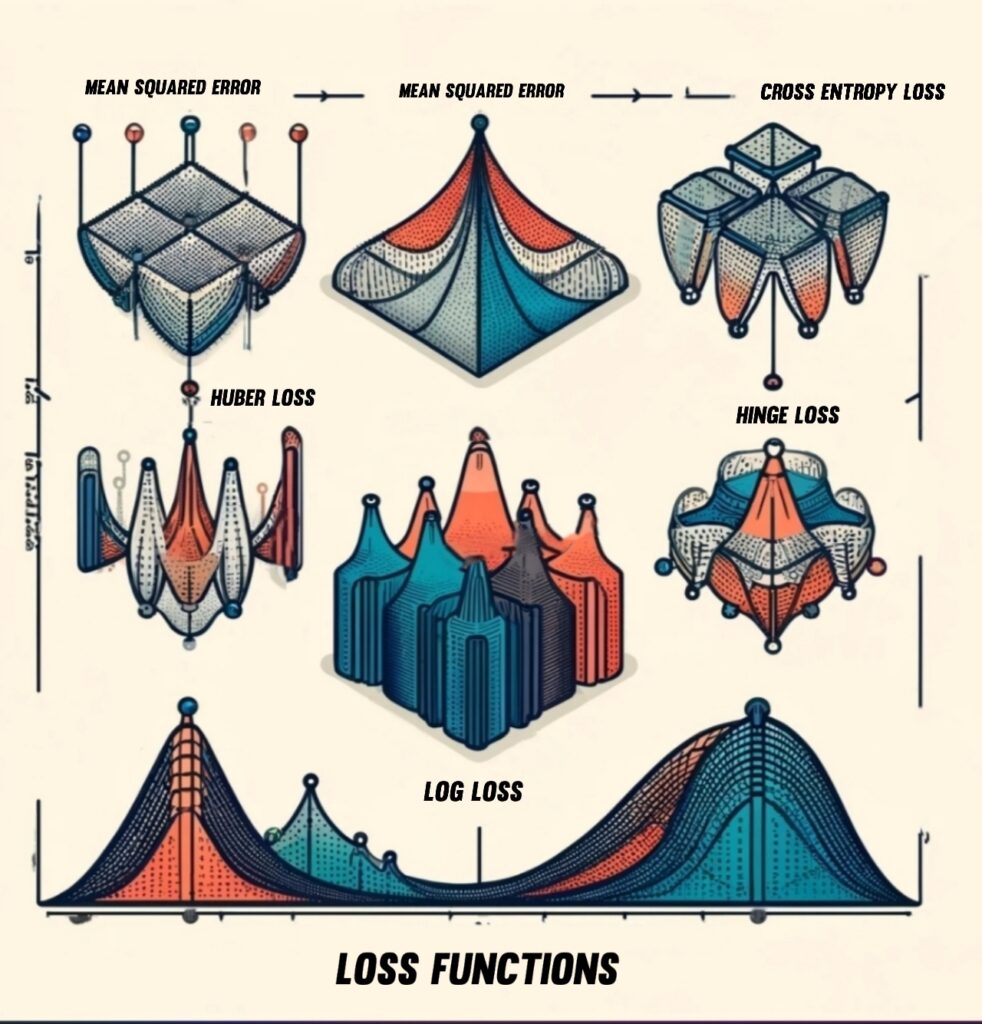

There are many types of loss functions, each suited to specific tasks. Essentially, they can be split into two categories:

- regression

- classification.

Regression Loss Functions

Regression models are used when we want to predict continuous values, such as prices or temperatures. Two common loss functions used in regression are:

- Mean Squared Error (MSE): This function calculates the average of the squared differences between the predicted and actual values. It’s widely used because squaring penalizes large errors more heavily. However, one downside is that it’s very sensitive to outliers, meaning extreme values can significantly affect the result.

$$\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(Y_i - \hat{y}_i\right)^2$$

Where:

- $n$ = number of observations

- $Y_i$ = actual value of the i-th observation

- $\hat{y}_i$ = predicted value of the i-th observation

- Mean Absolute Error (MAE): In contrast to MSE, MAE calculates the average of the absolute differences between predicted and actual values. Since it doesn’t square the errors, MAE is less sensitive to outliers and may provide a better measure of accuracy in datasets with extreme values.

$$\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left|Y_i - \hat{y}_i\right|$$

Where:

- $n$ = number of observations

- $Y_i$ = actual value of the i-th observation

- $\hat{y}_i$ = predicted value of the i-th observation
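To make the two regression losses concrete, here is a minimal plain-Python sketch. The function names and the sample numbers are illustrative assumptions, not part of any library. It computes both losses on a small dataset and then again after adding one extreme miss.

```python
def mean_squared_error(y_true, y_pred):
    """MSE: average of the squared differences."""
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """MAE: average of the absolute differences."""
    return sum(abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: four ordinary points.
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.5, 5.0, 4.0, 8.0]

mse_clean = mean_squared_error(actual, predicted)   # 1.3125
mae_clean = mean_absolute_error(actual, predicted)  # 0.875

# Add one extreme miss (an error of 90) and recompute both losses.
actual_outlier = actual + [100.0]
predicted_outlier = predicted + [10.0]

mse_outlier = mean_squared_error(actual_outlier, predicted_outlier)
mae_outlier = mean_absolute_error(actual_outlier, predicted_outlier)

print("MSE:", mse_clean, "->", mse_outlier)
print("MAE:", mae_clean, "->", mae_outlier)
```

With these numbers, the single outlier pushes MSE from about 1.3 to about 1621, while MAE moves from about 0.9 to about 18.7. This is the outlier sensitivity described above.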

Sometimes the target variable has a large standard deviation, for example when its values span several orders of magnitude. In that case MSE and MAE can be dominated by the largest targets and may not reflect errors on the smaller ones well, so a percentage-based error may be a better option.
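One common percentage-based error is the Mean Absolute Percentage Error (MAPE). The sketch below is only an illustration: the function name and data are assumptions, and it requires every actual value to be non-zero.

```python
def mean_absolute_percentage_error(y_true, y_pred):
    """MAPE in percent; assumes no actual value is zero."""
    if any(y == 0 for y in y_true):
        raise ValueError("MAPE is undefined when an actual value is zero")
    ratios = [abs(y - y_hat) / abs(y) for y, y_hat in zip(y_true, y_pred)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical targets spanning several orders of magnitude.
actual = [100.0, 2_000.0, 50_000.0]
predicted = [110.0, 1_800.0, 55_000.0]  # each prediction is off by 10%

mape = mean_absolute_percentage_error(actual, predicted)
print(f"MAPE: {mape:.2f}%")
```

Every prediction here is off by the same relative amount, so MAPE treats them equally, whereas MSE or MAE on the same data would be driven almost entirely by the largest target.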

Classification Loss Functions

Classification models are used when the goal is to assign labels to data, like identifying whether an email is spam or not. The two most common loss functions here are:

- Cross-Entropy Loss: Often used for multi-class classification tasks, cross-entropy measures the difference between the true probability distribution and the predicted one. In other words, it tells us how well the model’s predicted probabilities match the actual labels. The lower the cross-entropy, the better the model is at making correct predictions. On heavily imbalanced datasets, plain cross-entropy can be dominated by the majority class, so it is often paired with class weights or a variant such as Focal Loss.

- Hinge Loss: This loss function is typically used in support vector machines (SVMs). It works by focusing on the margin between classes. In other words, it penalizes the model whenever a sample is misclassified or falls inside the margin, even if it is on the correct side of the decision boundary.
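Both classification losses can be written in a few lines of plain Python. This is a sketch with assumed function names. The hinge version assumes labels encoded as +1 or -1 and a raw (unbounded) model score, which is the usual SVM convention.

```python
import math


def cross_entropy(true_dist, pred_dist, eps=1e-12):
    """Cross-entropy between a true distribution and predicted probabilities."""
    # eps guards against log(0) when a predicted probability is exactly zero.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(true_dist, pred_dist))


def hinge_loss(label, score):
    """Hinge loss for one sample; label is +1 or -1, score is the raw model output."""
    return max(0.0, 1.0 - label * score)


# Three-class example: the true class is the second one (one-hot encoded).
ce = cross_entropy([0, 1, 0], [0.1, 0.8, 0.1])

# Hinge loss: outside the margin, inside the margin, and on the wrong side.
hinge_confident = hinge_loss(+1, 2.5)   # correct and beyond the margin
hinge_in_margin = hinge_loss(+1, 0.3)   # correct side, but inside the margin
hinge_wrong = hinge_loss(-1, 0.3)       # wrong side of the boundary

print(round(ce, 4), hinge_confident, hinge_in_margin, hinge_wrong)
```

Note that the hinge loss is zero only once the sample clears the margin, while cross-entropy keeps rewarding higher confidence in the correct class.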

Real-World Examples of Loss Functions

To better understand how loss functions work, let’s look at a couple of real-world examples:

Example 1: Predicting House Prices (Regression with MSE)

Let’s say you’re creating a model to predict house prices. You use details like the size of the house, the number of bedrooms, and the neighborhood to make predictions.

How It Works:

- Your model predicts a price for each house, but it won’t be perfect at first. In this case, MSE measures how far off the predicted price is from the actual price, squaring the difference to give more weight to big errors.

- For example:

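- If a model predicts ₹40,00,000 instead of ₹50,00,000, the error is ₹10,00,000 and the squared error is 10,00,00,00,00,000 (10^12), heavily penalizing the large mistake.

- But if the model predicts ₹48,00,000, the error is only ₹2,00,000. Squaring this gives 40,00,00,00,000 (4 × 10^10), which is 25 times smaller even though the error itself is only 5 times smaller.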

Big errors are penalized much more than small ones, so the model learns to avoid them.
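The arithmetic for the two house-price predictions can be checked with a few lines of Python. The variable names are illustrative, and the prices are the ones from the example, written as plain integers.

```python
actual_price = 5_000_000  # ₹50,00,000

# The two hypothetical predictions from the example above.
predictions = {
    "far off": 4_000_000,  # ₹40,00,000
    "close": 4_800_000,    # ₹48,00,000
}

squared_errors = {}
for name, predicted_price in predictions.items():
    error = actual_price - predicted_price
    squared_errors[name] = error ** 2
    print(f"{name}: error = {error:,}, squared error = {squared_errors[name]:,}")

# How much more the larger mistake is penalized.
penalty_ratio = squared_errors["far off"] / squared_errors["close"]
print("penalty ratio:", penalty_ratio)
```

The larger error is 5 times the smaller one, but its squared error is 25 times larger. That quadratic growth is what pushes the model to avoid big misses.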

Outcome: Over time, the model adjusts to give better predictions for most houses, getting closer to their actual prices.

Example 2: Handwritten Digit Recognition (Classification with Cross-Entropy Loss)

Imagine you’re teaching a computer to recognize handwritten numbers, like those on cheque amounts. In this case, the computer looks at an image and tries to guess if it’s a “0,” “1,” or any digit up to “9.”

How It Works:

- The model predicts how likely each digit is based on the image. For example, it might say:

- “I’m 70% sure this is a 5, 20% sure it’s a 3, and 10% sure it’s an 8.”

- For instance, if the correct digit is “5,” cross-entropy checks how confident the model is about that answer.

- If the model is very confident (e.g., 70%), the penalty is small.

- On the other hand, if it’s more confident about a wrong digit, the penalty is much larger.

- The model learns by adjusting itself to be more confident in the correct digits over time.
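With a single correct label, cross-entropy reduces to the negative log of the probability the model gave to that label. The sketch below applies this to the 70% / 20% / 10% prediction from the example. The helper name and the dictionary layout are assumptions made for illustration.

```python
import math

# Predicted probabilities from the example; digits not listed get probability 0.
predicted = {5: 0.7, 3: 0.2, 8: 0.1}


def cross_entropy_for_label(probs, true_digit, eps=1e-12):
    """Cross-entropy when exactly one digit is correct: -log(p of that digit)."""
    return -math.log(max(probs.get(true_digit, 0.0), eps))


loss_if_5 = cross_entropy_for_label(predicted, 5)  # model was 70% sure
loss_if_3 = cross_entropy_for_label(predicted, 3)  # model was 20% sure
loss_if_8 = cross_entropy_for_label(predicted, 8)  # model was 10% sure

print(round(loss_if_5, 3), round(loss_if_3, 3), round(loss_if_8, 3))
```

If the true digit is 5, the loss is about 0.36. If the true digit were 8, the same prediction would cost about 2.30, more than six times as much.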

Outcome: After training, the computer becomes very good at guessing the right number for most images, making fewer mistakes.

These examples show just how important it is to choose the right loss function. The wrong choice can lead to poor model performance, while the right one can drastically improve the results.

How to Create a Custom Loss Function

In some cases, standard loss functions like Mean Squared Error (MSE) or Cross-Entropy Loss may not be the best fit for your model. Custom loss functions allow you to tailor the loss calculation to your specific problem. Let’s explore how to create one in TensorFlow.

Custom MAE-Based Loss Function

import tensorflow as tf

def weighted_mae(y_true, y_pred):
    error = tf.abs(y_true - y_pred)  # Calculate the absolute error
    weighted_error = tf.where(error > 1.0, error * 1.5, error)  # Penalize larger errors more
    return tf.reduce_mean(weighted_error)  # Return the mean weighted error

Explanation:

- This function customizes Mean Absolute Error (MAE) by applying extra weight to larger errors.

- First, it calculates the absolute error between the true values (y_true) and predicted values (y_pred).

- If the error exceeds 1.0, it multiplies the error by 1.5 to penalize larger mistakes more than smaller ones.

- Finally, the function computes the mean of all weighted errors to return the custom loss.
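To sanity-check the weighting by hand, here is a plain-Python mirror of the same logic that runs without TensorFlow. The name `weighted_mae_reference` and the sample values are hypothetical. It is meant only for checking numbers, not as a replacement for the TensorFlow version.

```python
def weighted_mae_reference(y_true, y_pred, threshold=1.0, weight=1.5):
    """Plain-Python mirror of the weighted MAE: errors above threshold are scaled by weight."""
    weighted = []
    for y, y_hat in zip(y_true, y_pred):
        error = abs(y - y_hat)
        weighted.append(error * weight if error > threshold else error)
    return sum(weighted) / len(weighted)


# Hypothetical values: absolute errors are 0.5, 0.0 and 3.0.
y_true = [1.0, 2.0, 5.0]
y_pred = [1.5, 2.0, 2.0]

loss = weighted_mae_reference(y_true, y_pred)
print(round(loss, 4))
```

Only the error of 3.0 crosses the threshold, so it counts as 4.5. The weighted errors are 0.5, 0.0 and 4.5, giving a mean of about 1.667, compared with about 1.167 for ordinary MAE.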

Huber Loss (Combination of MSE and MAE)

Huber loss is useful when you want to balance sensitivity to large errors while reducing the effect of outliers. It behaves like MSE for small errors and like MAE for larger ones.

import tensorflow as tf

def huber_loss(y_true, y_pred, delta=1.0):
    error = y_true - y_pred  # Calculate the error
    is_small_error = tf.abs(error) <= delta  # Check if the error is small
    squared_loss = 0.5 * tf.square(error)  # Apply squared loss for small errors
    linear_loss = delta * (tf.abs(error) - 0.5 * delta)  # Apply linear loss for larger errors
    return tf.reduce_mean(tf.where(is_small_error, squared_loss, linear_loss))  # Return the mean loss

Explanation:

- Huber loss is a hybrid function that behaves like Mean Squared Error (MSE) for small errors and like Mean Absolute Error (MAE) for larger ones.

- It calculates the error between the true and predicted values.

- If the absolute error is at most a threshold (delta), it uses squared loss (similar to MSE).

- For errors larger than delta, it switches to linear loss (similar to MAE), reducing sensitivity to extreme values.

- Finally, it returns the mean of the per-sample losses.
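The two branches are easier to see with concrete numbers. The sketch below is a plain-Python mirror of the same formula for a single error value. The name `huber_reference` is hypothetical and the code is intended only for hand-checking.

```python
def huber_reference(error, delta=1.0):
    """Huber loss for one error value: quadratic up to delta, linear beyond it."""
    abs_error = abs(error)
    if abs_error <= delta:
        return 0.5 * error ** 2
    return delta * (abs_error - 0.5 * delta)


small = huber_reference(0.5)     # quadratic branch: 0.5 * 0.25
boundary = huber_reference(1.0)  # both branches agree at the threshold
large = huber_reference(3.0)     # linear branch: 1.0 * (3.0 - 0.5)

# For comparison, the purely quadratic penalty on the large error.
large_quadratic = 0.5 * 3.0 ** 2

print(small, boundary, large, large_quadratic)
```

An error of 3.0 costs 2.5 under Huber loss but 4.5 under the purely quadratic penalty, and that gap widens as the error grows. At the threshold both branches give 0.5, so the loss has no jump there.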

Using a Custom Loss Function in a Model

Once you’ve created a custom loss function, you can easily integrate it into a TensorFlow/Keras model.

model.compile(optimizer='adam', loss=weighted_mae, metrics=['mae'])

Explanation:

- The model.compile() function sets up the model for training. In this case:

- optimizer='adam': You’re using the Adam optimizer, a popular choice due to its efficiency in training deep learning models.

- loss=weighted_mae: Here, you’re specifying the custom loss function (weighted_mae) you created earlier.

- metrics=['mae']: You’re also tracking the Mean Absolute Error (MAE) as a performance metric during training. This helps you monitor the model’s accuracy.

By defining your own loss functions, you gain more control over how the model learns and adapts, which can be essential for tackling specific challenges in your data or task.

Why Loss Functions Matter in Model Optimization

Without a clear loss function, there’s no way to track progress or identify mistakes—like trying to get better at something without knowing how you’re doing. A good loss function not only guides improvements but also helps measure success over time.

Moreover, loss functions are essential for improving predictions. They measure the difference between predicted and actual values, and training aims to minimize this difference, which leads to better results.
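To show how minimizing the loss drives learning, here is a small sketch of gradient descent on MSE for a one-parameter model, y = w * x. The data, learning rate, and step count are all assumptions chosen so the example converges quickly. Real training loops use an optimizer such as Adam rather than this hand-written update.

```python
def mse(w, xs, ys):
    """MSE of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_gradient(w, xs, ys):
    """Derivative of the MSE with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data generated by y = 2 * x, so the ideal weight is 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w = 0.0               # start from a poor guess
learning_rate = 0.01  # illustrative step size
loss_history = []

for step in range(200):
    loss_history.append(mse(w, xs, ys))
    # Move w a small step in the direction that lowers the loss.
    w -= learning_rate * mse_gradient(w, xs, ys)

print("first loss:", loss_history[0])
print("last loss:", loss_history[-1])
print("learned w:", w)
```

The loss starts at 30.0 and shrinks toward zero as w approaches 2. Without the loss and its gradient, the loop would have no signal telling it which way to adjust w.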

Practical Takeaways

- Choose the Right Loss Function for Your Task

If you are working on regression tasks, Mean Squared Error (MSE) and Mean Absolute Error (MAE) are good choices because they show how far off the predictions are from the actual values. For classification tasks, Cross-Entropy Loss is a better fit, as it compares predicted probabilities with the actual class labels.

- Try Different Loss Functions

Different loss functions can work better for different datasets and models. For example, Hinge Loss is often used in Support Vector Machines, while Binary Cross-Entropy is used mostly for binary classification. Trying out different loss functions can help you improve your model’s performance.

- Understand the Impact of Loss Functions on Training

The loss function tells the model whether it is doing well and what adjustments are needed. By selecting the right loss function, you can improve the accuracy of the model and speed up the learning process.
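One way to see why trying different loss functions matters is to ask which single constant prediction each loss prefers. The sketch below uses hypothetical data with one extreme value. It relies on the standard result that the mean minimizes MSE and the median minimizes MAE.

```python
import statistics


def mse_of_constant(values, c):
    """MSE when every prediction is the constant c."""
    return sum((v - c) ** 2 for v in values) / len(values)


def mae_of_constant(values, c):
    """MAE when every prediction is the constant c."""
    return sum(abs(v - c) for v in values) / len(values)


# Hypothetical targets with one extreme value.
values = [1.0, 2.0, 3.0, 4.0, 100.0]

mean_value = statistics.mean(values)      # 22.0, pulled up by the outlier
median_value = statistics.median(values)  # 3.0, unaffected by the outlier

print("MSE at mean / median:", mse_of_constant(values, mean_value),
      mse_of_constant(values, median_value))
print("MAE at mean / median:", mae_of_constant(values, mean_value),
      mae_of_constant(values, median_value))
```

MSE is lower at the mean (22.0), while MAE is lower at the median (3.0). The same data therefore leads to very different "best" predictions depending on the loss you train with.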

FAQs About Loss Functions

What is the difference between MSE and MAE?

- MSE squares the errors, making it more sensitive to outliers. It’s ideal when large mistakes need heavy penalties.

- On the other hand, MAE calculates the absolute difference between predicted and actual values, making it more robust to outliers.

Why do we use cross-entropy loss?

Cross-Entropy Loss is usually the best choice for classification because it helps optimize predicted class probabilities, especially in multi-class problems.

Which loss function is best for classification?

For most classification tasks, Cross-Entropy Loss is the best choice, particularly when you’re working with models that output probabilities (e.g., softmax outputs in neural networks). This is because it helps optimize the probability distribution over classes, encouraging the model to output high probabilities for the correct class and low probabilities for incorrect ones.

- For example, if you’re building a spam classifier, cross-entropy loss will guide your model to better predict whether an email is spam or not.

Can loss functions be customized for specific needs?

Yes! Custom loss functions can be created when your problem requires a unique penalty mechanism. For instance, you might create a custom loss to penalize large errors differently or integrate domain-specific knowledge into the loss calculation.

- For example, if you’re working with data that has known outliers (like predicting sales for rare, large-ticket items), you can create a loss function that reduces the penalty for those outliers, improving model performance.

How do I choose the right loss function for my problem?

The choice depends on your problem type and data. For classification, Cross-Entropy Loss is the standard, but you might experiment with others like Hinge Loss for support vector machines or Focal Loss for imbalanced classes. For regression, use MSE for general tasks, and MAE for datasets with outliers.
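For readers curious about Focal Loss, here is a minimal sketch of its usual binary form, which scales cross-entropy by (1 - p)^gamma, where p is the probability assigned to the true class. The function names are hypothetical, gamma=2.0 is an illustrative default, and the optional class-balancing weight is left out to keep the example short.

```python
import math


def binary_cross_entropy(p_true_class, eps=1e-12):
    """Cross-entropy for one sample, given the probability of its true class."""
    return -math.log(max(p_true_class, eps))


def binary_focal_loss(p_true_class, gamma=2.0, eps=1e-12):
    """Focal loss for one sample: cross-entropy scaled by (1 - p) ** gamma."""
    p = min(max(p_true_class, eps), 1.0)
    return -((1.0 - p) ** gamma) * math.log(p)


easy_ce = binary_cross_entropy(0.9)  # well-classified sample
easy_fl = binary_focal_loss(0.9)
hard_ce = binary_cross_entropy(0.1)  # badly misclassified sample
hard_fl = binary_focal_loss(0.1)

print(round(easy_ce, 4), round(easy_fl, 4))
print(round(hard_ce, 4), round(hard_fl, 4))
```

The easy sample’s loss is scaled down by a factor of 100, while the hard sample keeps about 81% of its cross-entropy. On imbalanced data, this shifts the training signal toward the examples the model still gets wrong.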

Conclusion

Loss functions play a pivotal role in machine learning by guiding the optimization process and improving the accuracy of your models. Choosing the right loss function, whether for regression or classification tasks, can make a substantial difference in model performance. A well-chosen loss function is key to reducing errors and ensuring your model makes accurate predictions.

As you move forward, experiment with different loss functions and adjust them based on the specifics of your dataset. This will help you fine-tune your models and achieve better results.

What’s your experience with selecting the right loss function? Have you faced challenges in finding the best one for your projects? Feel free to share your thoughts and questions in the comments below!